As the uptake of cloud services increases, cybercriminals are more interested than ever in exploiting vulnerabilities to attack cloud services and their customers. If your organisation is using cloud services, it’s important to recognise the shared responsibility model, in which the Cloud Service Provider (CSP) and the client share certain responsibilities, including cybersecurity. The CSP, such as AWS, Google Cloud, or Microsoft Azure, is responsible for securing the underlying services, whereas the client is responsible for the security of any cloud services that it configures and deploys. Cloud-focused penetration testing can help your organisation fulfil that responsibility. So what are the benefits of cloud penetration testing, and how does it differ from a standard pen test?

What exactly is cloud penetration testing?

Cloud penetration testing is a simulated attack where offensive security tests are performed to find exploitable security flaws in the cloud-native infrastructure before cybercriminals do. The primary goal of this form of testing is to assess an organisation’s cybersecurity posture within the cloud environment, prevent avoidable breaches in the system, and remain compliant with industry regulations.

Effective cloud penetration testing involves more than just running an automated scanner. It also relies on human skill to examine the flaws a scanner reports, simulate an attack, and determine how the security vulnerabilities in your cloud network could result in actual data compromise. Cloud penetration testing helps organisations learn about the strengths and weaknesses of their cloud-based architecture, so that they can safeguard the company’s data, intellectual property, finances, and reputation more effectively.

What’s the difference between cloud penetration testing and traditional penetration testing?

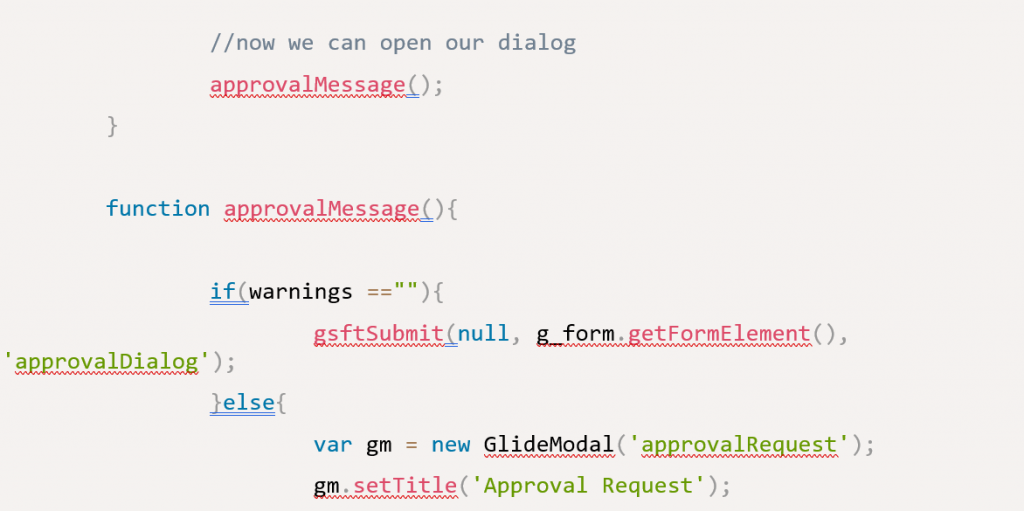

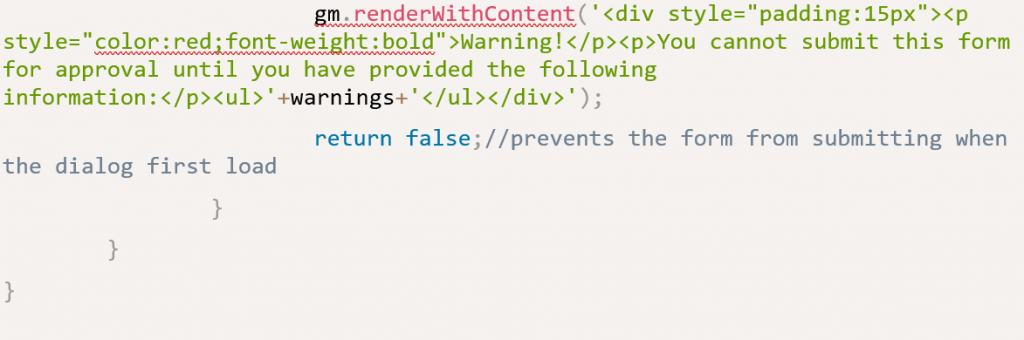

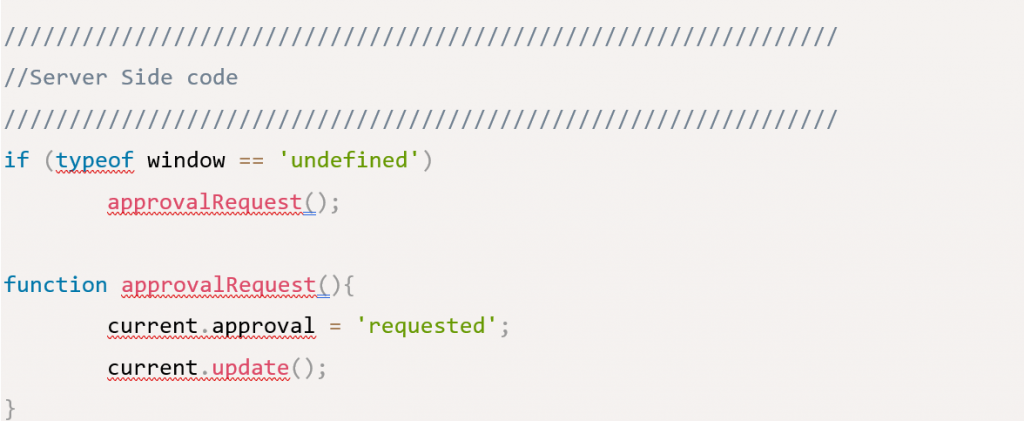

Although cloud penetration testing applies the principles of traditional on-premises penetration testing, there is a major difference in the approach and environment of testing. This is because services in the Cloud are configured and operate differently from those in an on-premises infrastructure. Depending on the type of cloud service and the provider, different manual approaches and cloud penetration testing tools may be used.

Furthermore, the cloud environment is owned and operated by a CSP. Each provider has its own specific guidelines for conducting a pen test on its cloud services, and you must follow them.

Common security vulnerabilities in the Cloud

Some of the most common vulnerabilities that cloud penetration testing can identify include:

- Misconfigured accounts, access lists, and buckets: Misconfigurations of accounts, access lists, and data containers are the most common vulnerabilities that can lead to a compromise of cloud security. Overly permissive accounts or containers violate the principle of least privilege and can therefore result in data disclosure.

- Weak authentication, credentials and identity management: Accounts with weak authentication mechanisms allow an attacker to gain a foothold in the cloud system much more easily. This compromises all of the information that those accounts can access, and if least privilege is not strictly enforced, a deeper compromise is likely to follow.

- Data breaches: Harvesting publicly exposed credentials for cloud accounts is another frequent way to compromise the Cloud. An effective cloud penetration test can help identify sensitive information in publicly available repositories, assess the likely repercussions, and provide advice on how to strengthen that aspect of your security posture.

- Insecure interfaces and APIs: Attackers often probe the cloud infrastructure to identify weak links that could help them gain a foothold in the system. An experienced cloud pen tester will explore and identify those insecure entry points before cybercriminals are able to exploit them.
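
Two of the items above, overly permissive access policies and publicly exposed credentials, lend themselves to simple automated checks. The following is a minimal Python sketch of what such checks might look like, not a description of any particular tool or of a tester's actual methodology. It assumes AWS-style JSON policy documents and AWS-style access key IDs; the function names and the sample policy are hypothetical, and the wildcard test is deliberately simplistic (it only flags a bare `*`, not partial wildcards such as `s3:*`).

```python
import re

# Assumption: AWS-style access key IDs, which are 20 characters long and
# start with a prefix such as AKIA (long-term) or ASIA (temporary).
ACCESS_KEY_ID_PATTERN = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def _as_list(value):
    """Policy fields may hold a single value or a list of values."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_overly_permissive_statements(policy):
    """Return (index, reason) pairs for Allow statements that use a bare wildcard."""
    findings = []
    for index, statement in enumerate(_as_list(policy.get("Statement"))):
        if statement.get("Effect") != "Allow":
            continue
        if "*" in _as_list(statement.get("Action")):
            findings.append((index, "allows every action"))
        if "*" in _as_list(statement.get("Resource")):
            findings.append((index, "applies to every resource"))
        principal = statement.get("Principal")
        any_principal = principal == "*" or (
            isinstance(principal, dict) and "*" in _as_list(principal.get("AWS"))
        )
        if any_principal:
            findings.append((index, "grants access to any principal"))
    return findings


def find_exposed_access_key_ids(text):
    """Return the unique strings in `text` that look like access key IDs."""
    return sorted(set(ACCESS_KEY_ID_PATTERN.findall(text)))


if __name__ == "__main__":
    # Hypothetical policy used purely for illustration.
    sample_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "*", "Resource": "*"},
        ],
    }
    for index, reason in find_overly_permissive_statements(sample_policy):
        print(f"Statement {index}: {reason}")

    # AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
    sample_text = "aws_access_key_id = AKIAIOSFODNN7EXAMPLE"
    print(find_exposed_access_key_ids(sample_text))
```

Checks like these only surface candidates. A tester would still need to confirm whether a flagged policy or key is actually exploitable, which is where the manual side of a cloud penetration test comes in.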

Why do you need regular cloud penetration testing?

As cloud services continue to offer new technologies that encourage businesses to move their workloads to the Cloud for agility and for time and cost efficiency, attackers are also adjusting to changes in the cloud landscape. As a result, the security risks associated with cloud-based systems and services are evolving rapidly, which is why cloud pen testing should be conducted more frequently than standard on-premises penetration testing. A skilled penetration tester will provide useful guidance on how to fix any security flaws found during the test, allowing you to improve your cloud security going forward.

Moving forward with a trusted cloud penetration testing partner

Almost every modern organisation uses cloud services, but the majority lack the tools, methodologies, or experts on hand to conduct a cloud pen test. Partnering with an experienced cloud security provider can bring your cloud platform closer to where it needs to be from a security standpoint.

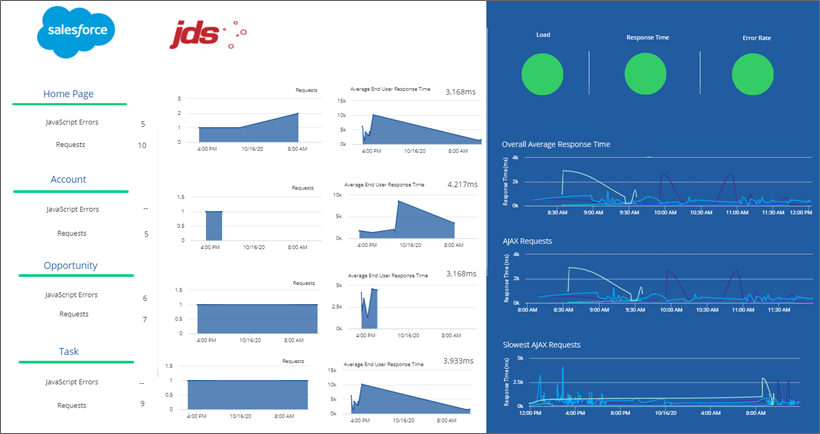

JDS Security has the experience and expertise to defend your business in the Cloud, with deep and unmatched knowledge of AWS, Azure, and Google Cloud services to help reach your cloud and digital transformation goals securely.