Twice a year, RMIT publishes course results for its students on the student portal of the university’s website. These two days in the calendar generate the highest volume of website traffic. With such large spikes concentrated in a 24-hour period, RMIT’s student portal became saturated and could not handle the volume effectively. The result was poor performance, and students reported difficulty accessing their results in a timely fashion.

Tim Ash, RMIT’s senior production assurance and test manager, explains, “The single most important aspect of our students’ lives, apart from the education they receive, is knowing how well they are doing in their studies. Having a stable student portal is critical to meeting our students’ needs and delivering a high level of service. It also affects our credibility and future success.

“For this reason, we identified performance testing as an essential part of future application delivery. With limited resources available in-house and a tight deadline, we invited JDS Australia (JDS), an HP Platinum Partner, to help us predict future system behaviour and application performance.”

Objective

Predict system behaviour and application performance to improve student satisfaction

Approach

RMIT University verified that MyResults, its new mission-critical student results application, met specified performance requirements

IT improvements

- Achieved 100 percent uptime on MyResults

- Delivered student results 60 percent faster than predicted

- Gained an accurate picture of MyResults’ end-to-end performance

- Emulated peak loads of 20,000+ student logins per hour – more than six times the average loads

- Uncovered and rectified functional issues prior to going live

- Received no helpdesk complaints about poor system performance

- Identified optimisation opportunities that enabled the student portal to be scaled more appropriately

About RMIT University

As a global university of technology and design, RMIT University is one of Australia’s leading educational institutions, enjoying an international reputation for excellence in work-relevant education.

RMIT employs almost 4,000 people and has 74,000 students studying at its three Melbourne campuses and its campuses in Vietnam. The university also has strong links with partner institutions that deliver RMIT award programmes in Singapore, Hong Kong, mainland China and Malaysia, as well as research and industry partnerships on every continent.

RMIT has a broad technology footprint, counting its website and student portal as mission-critical applications essential to the day-to-day running of the university.

Industry

Higher Education

Primary applications

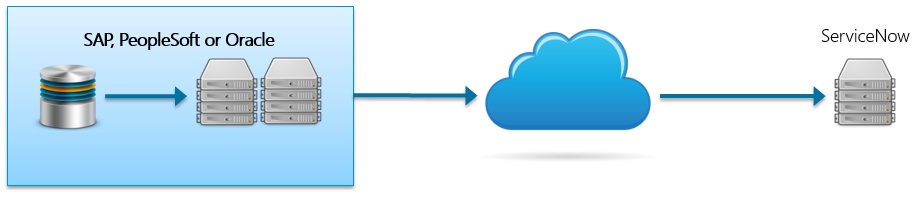

- SAP Financials

- PeopleSoft Student Administration

Primary software

- HP LoadRunner software

- HP Quality Center

- HP QuickTest Professional software

Selecting an industry standard

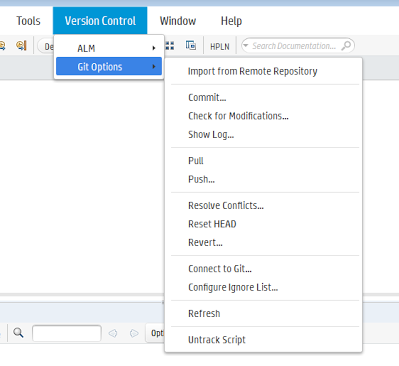

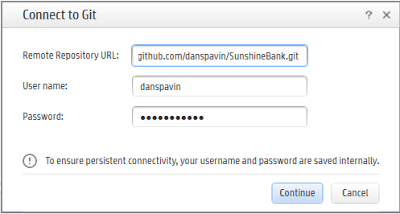

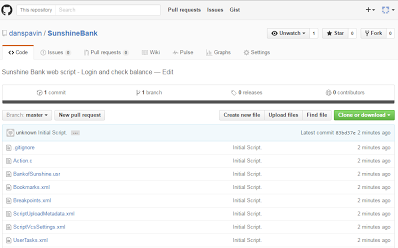

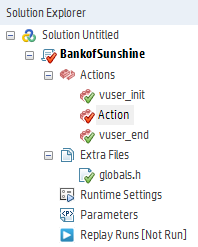

A long-term user of HP software, including HP Quality Center, HP QuickTest Professional and HP Business Availability Center, RMIT chose HP LoadRunner software as its performance validation platform.

“HP LoadRunner is the industry standard for performance testing,” explains Tim. “It was a natural choice for RMIT because of its functionality and integration capabilities, as well as our investment in other HP software.”

Predicting system behaviour

To prevent future performance problems on the student portal, RMIT had identified a number of potential solution designs.

Tim explains, “Due to the complex nature of our student portal, it was unclear which solution design would provide the best performing architecture. HP LoadRunner was used to obtain an accurate picture of end-to-end system performance by emulating the types of loads we receive on result days. We tested all the options. Our objective was to handle the loads and satisfy 100 percent of our students’ results queries in under five seconds.”
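RMIT’s stated objective, 100 percent of results queries answered in under five seconds, amounts to a simple pass/fail check over recorded response times. The sketch below is a hypothetical illustration in Python, not part of HP LoadRunner or RMIT’s tooling: the names `meets_objective` and `slowest` are invented here, and the sample timings are made up rather than taken from RMIT’s tests.

```python
# Hypothetical sketch: function names and sample timings are illustrative
# assumptions, not RMIT data or an HP LoadRunner API.

OBJECTIVE_SECONDS = 5.0  # "100 percent of ... results queries in under five seconds"


def meets_objective(response_times_s, threshold_s=OBJECTIVE_SECONDS):
    """Return True only if every recorded response is strictly under the threshold.

    An empty sample returns False, because having no measurements proves nothing.
    """
    if not response_times_s:
        return False
    return all(t < threshold_s for t in response_times_s)


def slowest(response_times_s):
    """Return the worst-case (maximum) response time in the sample."""
    return max(response_times_s)


if __name__ == "__main__":
    sample = [1.2, 0.9, 1.8, 1.5]  # made-up timings in seconds
    print(meets_objective(sample))  # True
    print(slowest(sample))          # 1.8
```

Because the target covers 100 percent of queries, it is a worst-case requirement: a single response at or above five seconds fails it, which is why the check uses `all()` rather than an average.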

Emulating loads

Drawing on the experience of JDS, RMIT used HP LoadRunner to emulate peak loads of 20,000+ student logins per hour, more than six times the average load on non-results days. “The data from our tests revealed that our student portal platform needed to scale considerably, to handle traffic up to six times the usual volume on result days,” explains Tim. “As we drove loads against the various design options, we also captured end-user response times.

“Based on this, we selected the design solution that had the best performance. There were several options to choose from, some of which went through a portal that put unnecessary load on the system. The option we chose was a brand-new application called MyResults, which met our performance objective during testing and delivered response times of less than two seconds.”
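The load figures quoted above can be restated in per-second terms with some simple arithmetic. This is a back-of-envelope sketch in Python, assuming logins are spread evenly across the peak hour and taking the quoted figures at face value (exactly 20,000 logins and a ratio of six); the constant and function names are hypothetical.

```python
# Back-of-envelope arithmetic for the quoted load figures.
# Assumptions: logins spread evenly over the hour; figures taken at face value.
# All names here are hypothetical.

PEAK_LOGINS_PER_HOUR = 20_000  # "20,000+ student logins per hour"
PEAK_TO_AVERAGE_RATIO = 6      # "more than six times the average loads"
SECONDS_PER_HOUR = 3_600


def per_second(logins_per_hour):
    """Convert an hourly login count into an average arrival rate per second."""
    return logins_per_hour / SECONDS_PER_HOUR


def implied_average(peak_per_hour, ratio):
    """Average hourly load implied by taking peak = ratio * average at face value."""
    return peak_per_hour / ratio


if __name__ == "__main__":
    print(round(per_second(PEAK_LOGINS_PER_HOUR), 2))  # 5.56 logins per second
    print(round(implied_average(PEAK_LOGINS_PER_HOUR, PEAK_TO_AVERAGE_RATIO), 1))  # 3333.3 per hour
```

The evenly spread rate of roughly 5.6 logins per second is only a floor for the busiest moments: the highest instantaneous rate within an hour is always at least its average.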

The JDS account manager for the case says, “In addition to testing the performance of the various design solutions, the team identified optimisation opportunities that enabled the student portal to be scaled more appropriately. Using HP LoadRunner, JDS consultants identified bottlenecks in the application and platform. By working with RMIT, they were able to direct efforts to remediate the problems prior to going live.”

Successful go-live drives student satisfaction

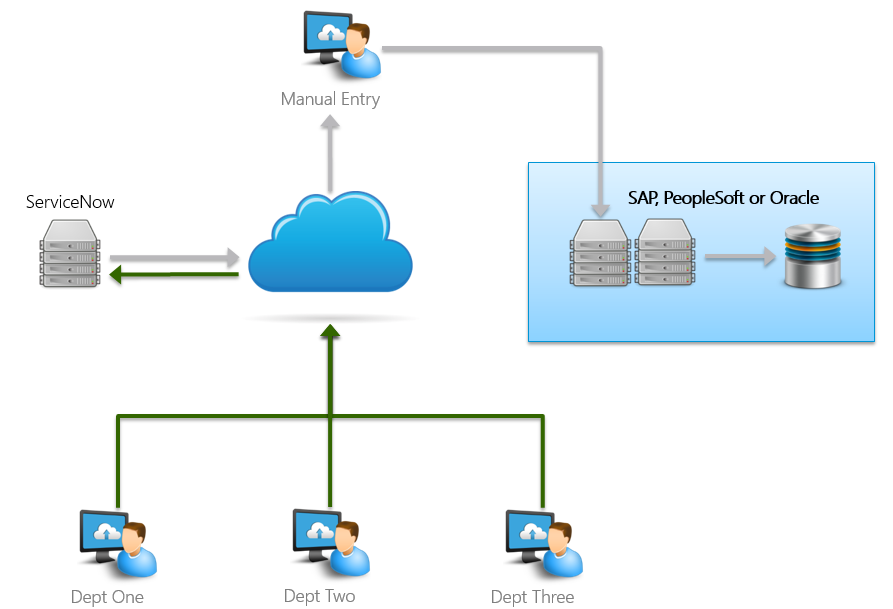

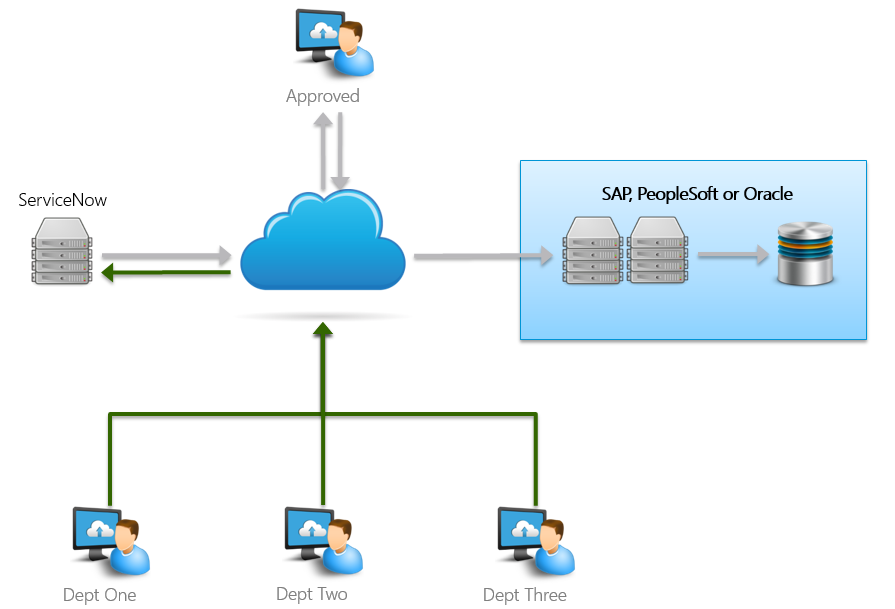

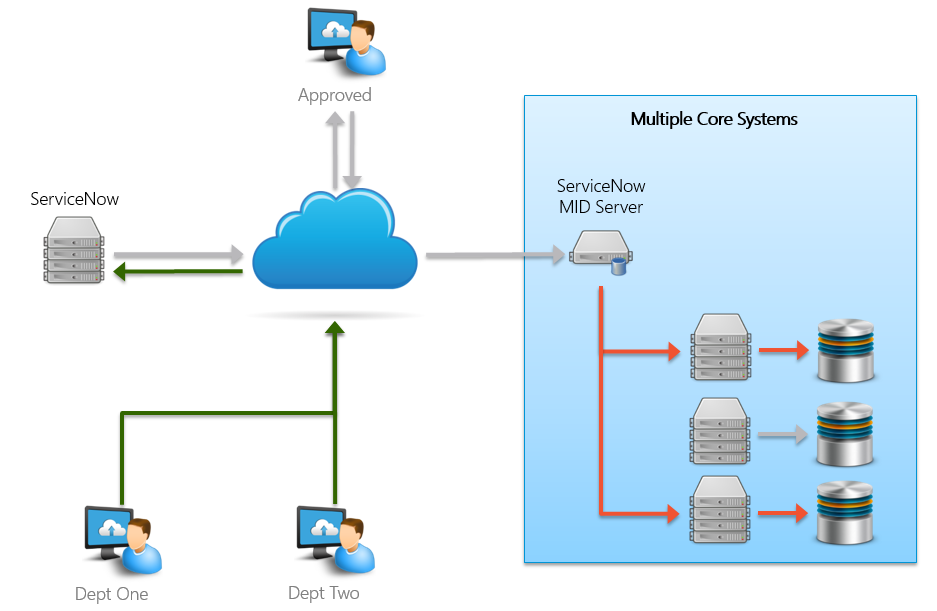

While MyResults simply delivers results, its structure is quite complex because it receives inputs from various databases. Ensuring it would perform as intended on results day was key.

Tim says, “By using HP LoadRunner, we significantly decreased the risk of deploying an application that would not meet our performance requirements. On results day, MyResults proved an outstanding success. It handled the loads and spikes extremely well, consistently delivering results in under two seconds. This would not have happened if we had not validated performance beforehand.

“Our students certainly noticed the difference. We received a number of tweets praising the system’s performance. Many of our students couldn’t believe how quickly they obtained their results. Another great indication of our success was the low impact on our helpdesk. It received no complaints or issues regarding the system, and that’s a big plus.”

Boosting reputation

As a result of using HP LoadRunner to validate the performance of MyResults, RMIT has realised considerable benefits. Information is more readily available, which has supported better decision-making, and the institution has gained operational efficiencies.

Tim says, “We are delighted with the outcomes of using HP LoadRunner. First and foremost, we rectified poor performance issues to provide a results system that exceeded our own goals and those of our students. Thanks to the preparatory measures we put in place, our system thrived and delivered 100 percent uptime. This enabled us to provide a high-quality student experience and increased user satisfaction.

“Operationally, HP LoadRunner helped us to identify the most suitable option to improve our performance. Before release, it gave us confidence in MyResults’ ability to perform and allowed us to make informed business decisions, which reduced our deployment risk. In addition, we saved money because we were more efficient and did not experience any downtime. Plus, we were able to fix issues in development, which is always cheaper, and we saved considerable time during testing by being able to reuse and repeat tests.”

Business Benefits

- Providing students with fast, reliable access to results boosted the university's reputation; students tweeted their satisfaction.

- Money was saved through zero downtime and the ability to fix issues early.

- RMIT could make informed decisions and reduce the risk of deploying poor-quality applications.

- University and student confidence in IT systems was improved.

Next steps

HP LoadRunner will continue to play a key role as the performance validation backbone of MyResults. Tim believes that, with the HP solutions it uses and its adoption of a stringent testing methodology, RMIT is well up to date with testing technology.

“Students are early adopters of technology. So it’s logical that our next big push is mobility and wireless applications. More and more, our students are accessing RMIT’s website using mobile devices and we need to make sure our applications are optimised accordingly. We want students to be able to log in from home and attend a lecture from their laptop or access their results from their mobile phones. HP LoadRunner will be used to ensure we can meet the wireless requirements of the university’s future.

“Looking at the big picture, HP LoadRunner is an essential component of RMIT’s technology footprint. It allows us to perform due diligence on our applications, make go-live decisions with confidence and provide statistical information that is trusted and relied upon by the institution.

“Ultimately, HP LoadRunner gives me peace of mind that RMIT’s systems will work as intended and deliver the quality of service our students and staff expect.”