SAP PowerConnect for Splunk is the only SAP-certified SAP-to-Splunk connector, “powered by SAP NetWeaver.” The solution runs inside SAP, extracts machine data, security events, and logs, and ingests them into Splunk in real time. As an SAP partner and the sole Australian implementation partner for SAP PowerConnect for Splunk, JDS Australia can help you see what’s happening inside your SAP system, proactively report on trends, alert on incidents, and even predict what will happen in the future.

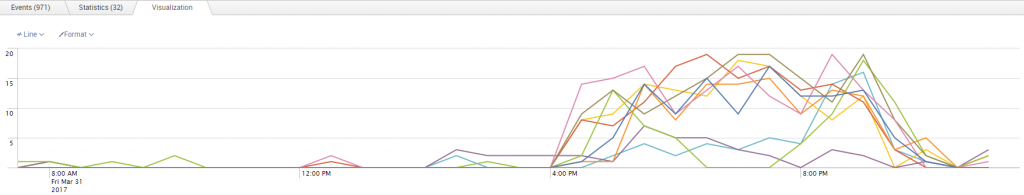

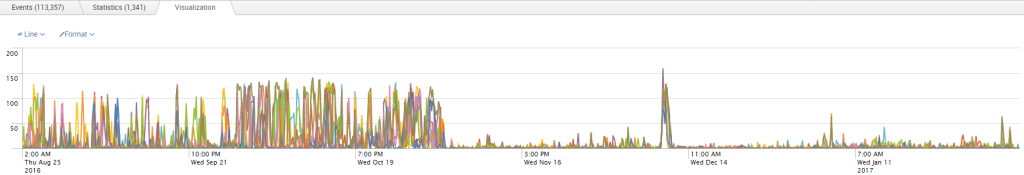

This screen shows standard performance metrics from within SAP:

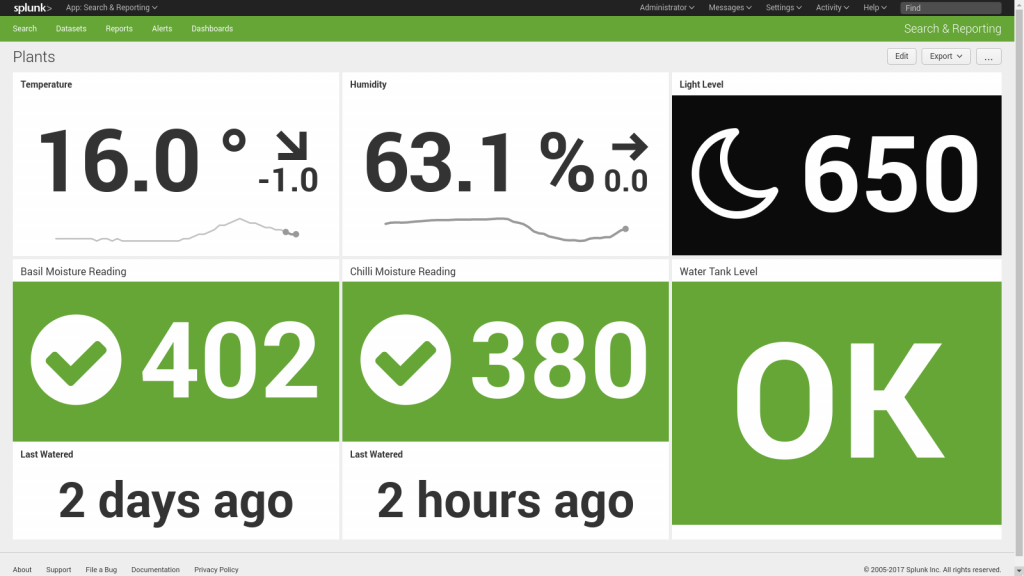

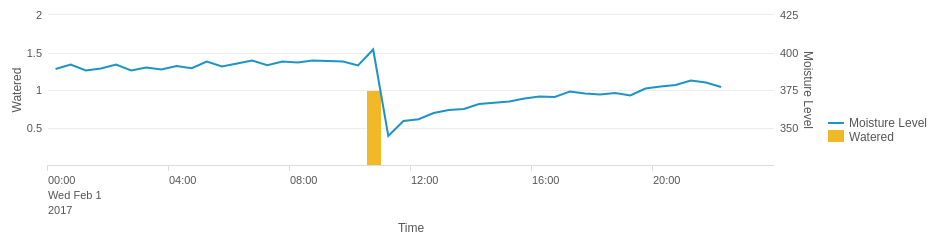

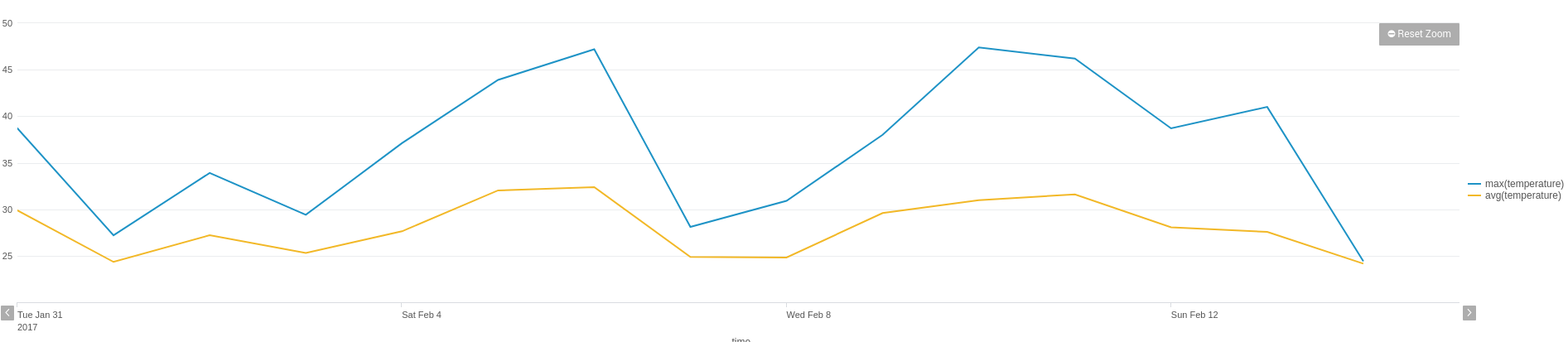

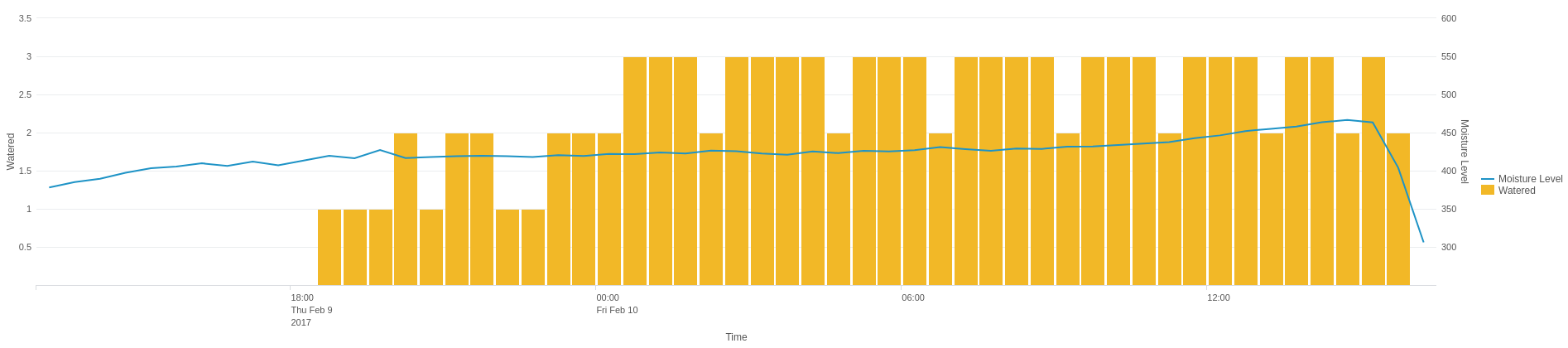

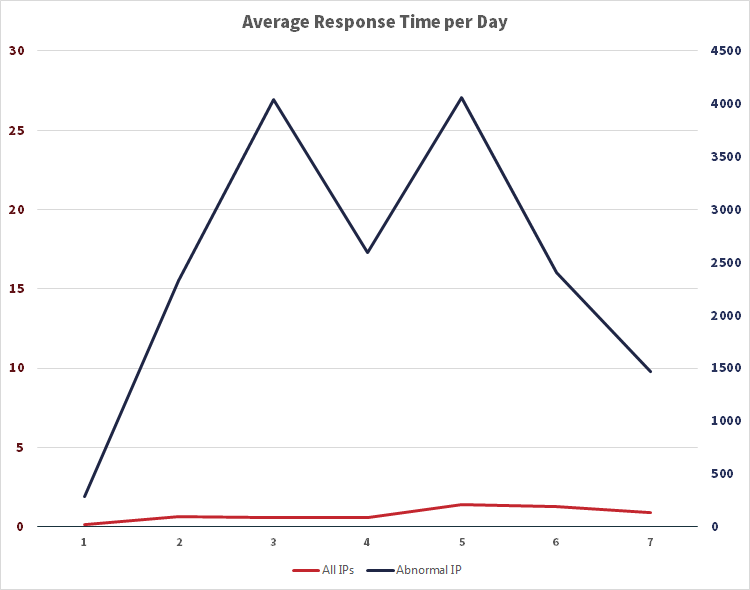

SAP PowerConnect for Splunk converts this tabular data and presents it on a highly visual dashboard:

Find out more about SAP PowerConnect for Splunk and how it can be a key enabler for your business in 2018 by attending our event, “Splunkify your SAP data with PowerConnect.” JDS and Splunk are co-hosting the event in Sydney and Melbourne in May 2018. Light canapés and beverages will be served. Choose the location most convenient for you and register below. We look forward to seeing you there.