New technology terms are constantly being coined. One of our lead consultants answers the question: Is DevPerfOps a thing?

Hopefully it’s no surprise that traditional performance testing has struggled to adapt to the new paradigm of DevOps, or even Agile. The problems can be boiled down to a few key factors:

- Performance testing takes time, both to script and to execute. A typical performance test engagement lasts 4-6 weeks, during which the rest of the project team makes significant progress.

- Introducing performance testing to a CI/CD pipeline is really challenging. Tests typically run for an hour or more and can hold up the pipeline, and they are extremely sensitive to application changes as the scripts operate at the protocol level (typically HTTP requests).

- Performance testing often requires a full-sized, production-like infrastructure environment. These aren’t cheap and are normally unavailable during early development, making “early performance testing” more of an aspiration than a practical option.

All the software vendors will tell you that DevOps with performance testing is easy, but none of them have solved the above problems. For the most part, they have simply added a CI/CD hook without even attempting to challenge the concept of performance testing in DevOps at all.

A new world

So what would redefining the concept of performance testing look like? Allow me to introduce DevPerfOps. We would define the four main activities of a DevPerfOps engineer as:

- Tactical Load Tests

- Microbenchmarking

- APM and Code Reviews

- Container Workloads

Let’s start at the top.

Tactical Load Testing

This can be broadly defined as running performance tests within the limitations of a CI/CD pipeline. Is it perfect? No. Is it better than not doing anything or struggling with a full end-to-end test? Absolutely, yes!

Tactical Load Testing has the following objectives:

- Leverage CI toolkits like Jenkins for execution and results collection.

- Use lightweight toolsets for execution (JMeter, Taurus, Gatling, LoadRunner, etc. are all perfectly good choices).

- Configure performance tests to run for short durations. 15-20 minutes is the ideal balance. This can be achieved by minimising the run logic, reducing think times, and focusing on only testing individual business processes.

- Expect to run your performance tests on a scaled-down environment, or a single server node. Think about what this will do to your workload profile.
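As a rough illustration of what a scaled-down environment and reduced think times do to a workload profile, the sketch below applies Little's Law (concurrent users = throughput × time per iteration). The `WorkloadProfile` class, the function names, and all the numbers are hypothetical, and the assumption that throughput scales linearly with node count is a simplification that rarely holds exactly in practice.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    """Target load for one business process (all values are illustrative)."""
    throughput_per_sec: float  # completed iterations per second
    response_time_sec: float   # expected average response time
    think_time_sec: float      # pause a virtual user takes between iterations


def scale_for_environment(profile, prod_nodes, test_nodes):
    """Scale target throughput by node count (assumes linear scaling)."""
    factor = test_nodes / prod_nodes
    return WorkloadProfile(
        throughput_per_sec=profile.throughput_per_sec * factor,
        response_time_sec=profile.response_time_sec,
        think_time_sec=profile.think_time_sec,
    )


def virtual_users_needed(profile):
    """Little's Law: concurrency = throughput x time spent per iteration."""
    return profile.throughput_per_sec * (
        profile.response_time_sec + profile.think_time_sec
    )


# Hypothetical production target: 40 iterations/sec across 4 nodes.
production = WorkloadProfile(40.0, response_time_sec=0.5, think_time_sec=9.5)

# The same process on a single node in the pipeline environment.
single_node = scale_for_environment(production, prod_nodes=4, test_nodes=1)

# Reducing think time keeps the throughput but needs far fewer virtual users.
short_think = WorkloadProfile(single_node.throughput_per_sec, 0.5, 1.5)

print(virtual_users_needed(production))   # 400.0
print(virtual_users_needed(single_node))  # 100.0
print(virtual_users_needed(short_think))  # 20.0
```

The point of the exercise is that a short pipeline test is a different workload from the production one, so its results are best compared against earlier runs of the same test rather than against production targets.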

Microbenchmarking

Microbenchmarks are small unit or integration tests that check a component stays within a performance budget and flag any deviation from it.

Microbenchmarking can target things like core application code, SQL queries, and Web Service integrations. There is an enormous amount of potential scope here; for example, you can write a microbenchmark test for a login, a report execution, or a data transformation. Anything goes if it makes sense.

Most importantly, microbenchmarking is a great opportunity to test early in the project lifecycle and provide early feedback.
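A microbenchmark can be as simple as timing a function and failing the build when it exceeds a budget. The following is a minimal sketch using Python's standard `timeit` module; `transform_records`, `check_budget`, and the 10 ms budget are hypothetical stand-ins for whatever component and threshold make sense in your project.

```python
import timeit


def best_time_per_call(func, number=1000, repeat=5):
    """Return the fastest observed time per call, in seconds."""
    timings = timeit.repeat(func, number=number, repeat=repeat)
    # The minimum is the run least disturbed by other activity on the machine.
    return min(timings) / number


def transform_records():
    """Hypothetical unit under test: a small data transformation."""
    return sorted(str(i) for i in range(200))


def check_budget(func, budget_sec):
    """Raise AssertionError if func is slower than its budget."""
    elapsed = best_time_per_call(func)
    if elapsed > budget_sec:
        raise AssertionError(
            f"{func.__name__} took {elapsed:.6f}s per call, "
            f"budget is {budget_sec:.6f}s"
        )
    return elapsed


elapsed = check_budget(transform_records, budget_sec=0.01)
print(f"transform_records: {elapsed * 1e6:.1f} microseconds per call")
```

Because absolute timings vary between machines, a generous budget that catches order-of-magnitude regressions is usually more stable in a shared CI environment than a tight one.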

APM and Code Reviews

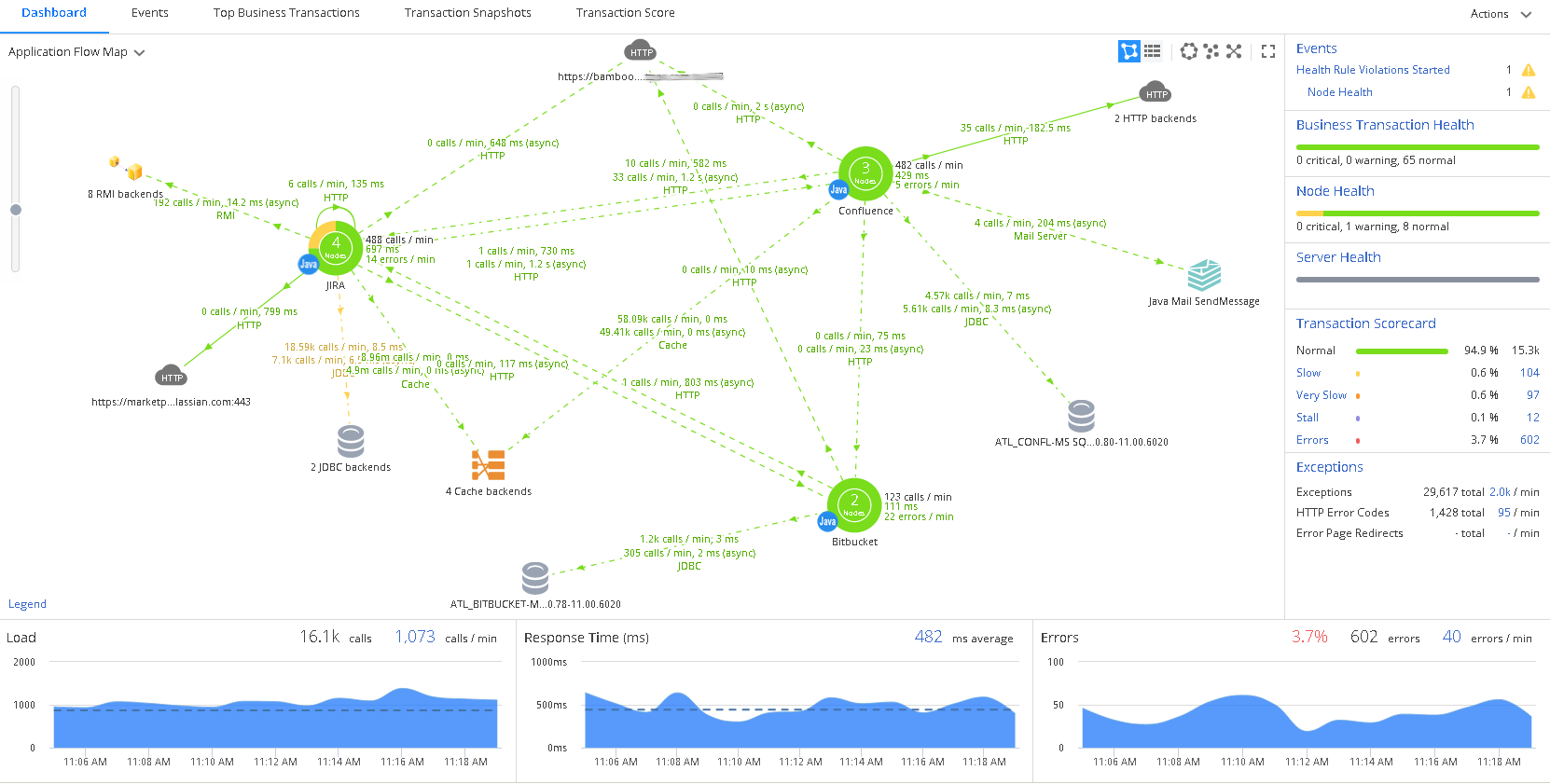

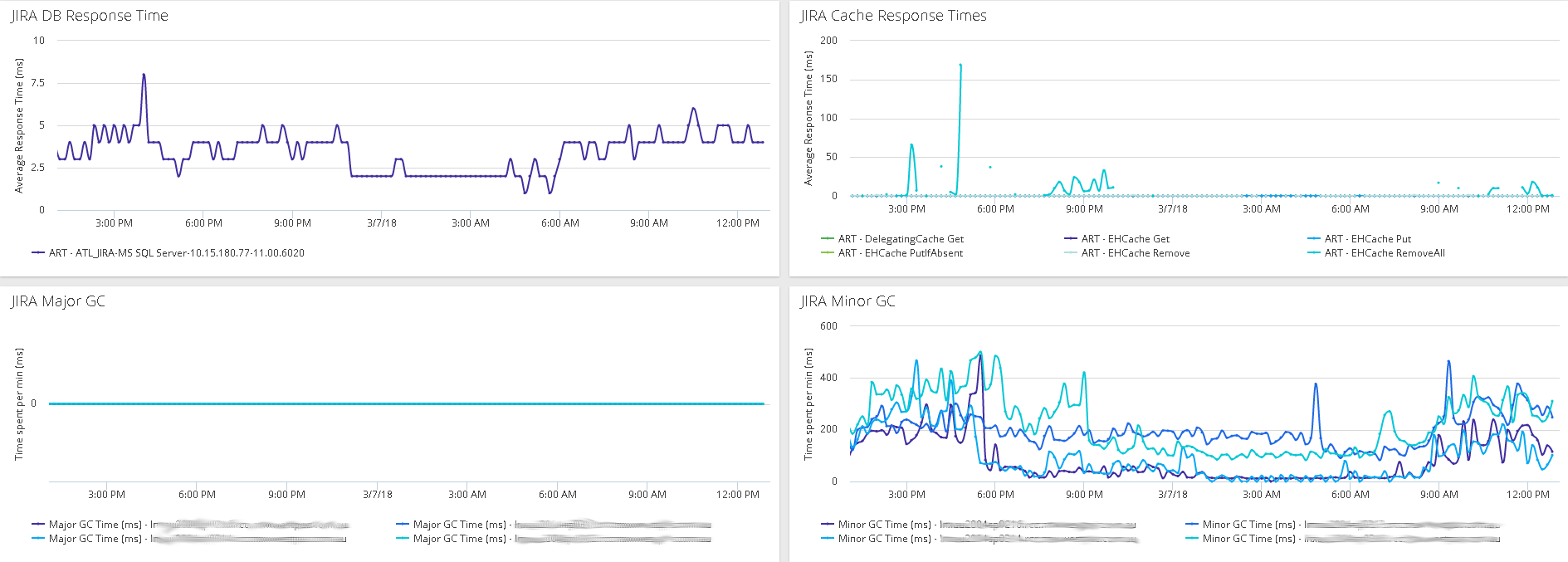

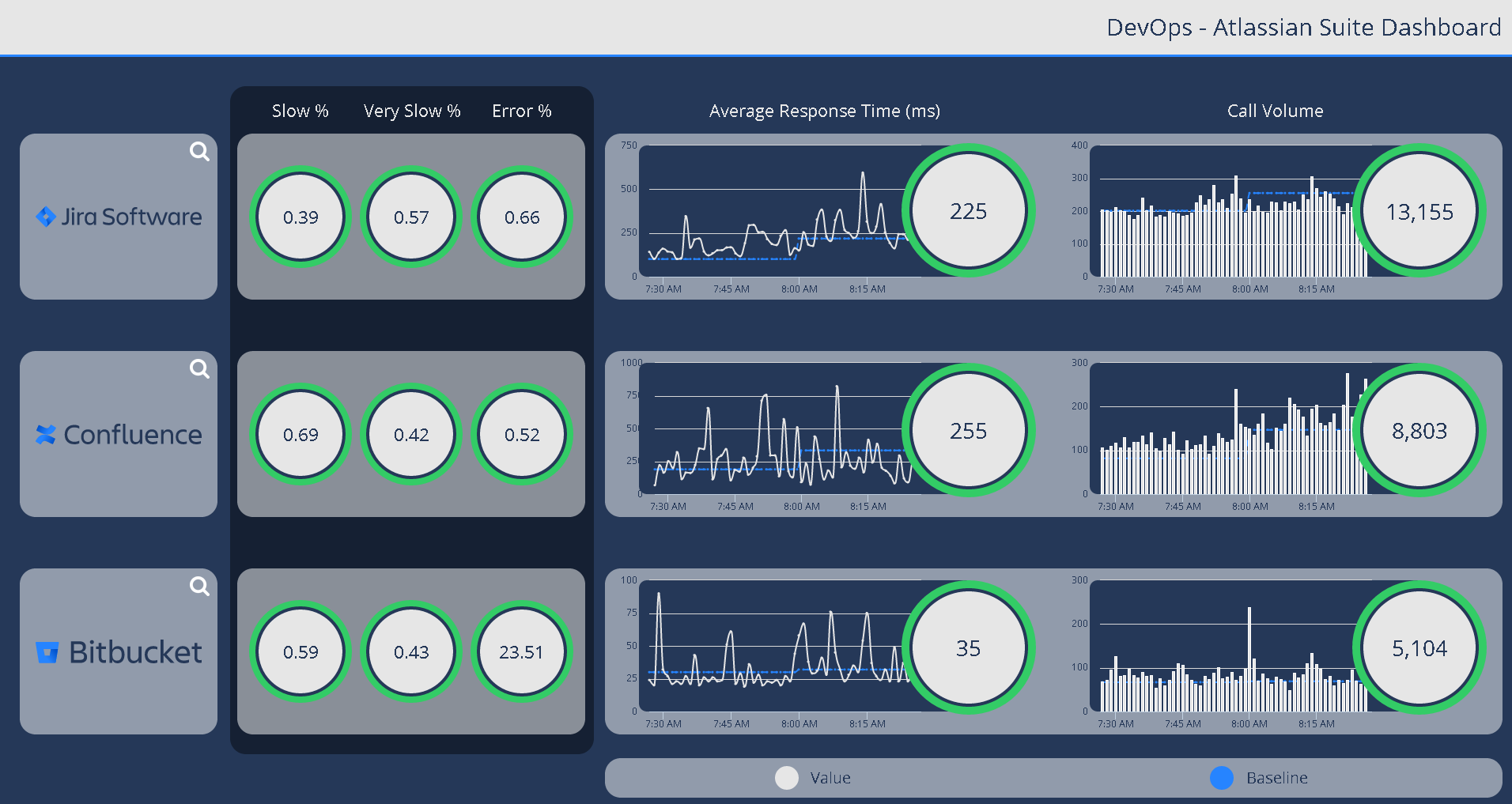

In past years, having access to a good APM tool or profiler, or even the source code, has been a luxury. Not anymore: these tools are everywhere, and while a fully featured tool like AppDynamics or New Relic is beneficial for developers and operations, a lot can be achieved with low-cost tools like YourKit Profiler or VisualVM.

APM tools and profilers identify slow execution paths and measure memory usage and CPU utilisation. Resource-intensive workloads are easy to spot, so performance can be baked into the application.
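To show the kind of output a profiler gives, here is a small sketch using Python's built-in `cProfile` and `pstats` modules rather than any of the commercial tools named above. The functions `slow_path` and `handle_request` are hypothetical examples of a hot spot and its caller.

```python
import cProfile
import io
import pstats


def slow_path(n):
    """Hypothetical hot spot: builds a large string in a loop."""
    out = ""
    for i in range(n):
        out += str(i)
    return out


def handle_request():
    """Hypothetical entry point that calls the hot spot."""
    return len(slow_path(20000))


def profile_report(func, top=5):
    """Profile one call to func and return the top entries as text."""
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time so the slowest call paths appear first.
    stats.sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


report = profile_report(handle_request)
print(report)
```

The report lists each function with its call count and cumulative time, which is usually enough to see where a request spends most of its time before reaching for a heavier tool.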

Container Workloads

Containers will one day rule the world. If you don’t know much about containers, you need to learn about them fast.

In terms of performance engineering, each container handles a small unit of workload and container orchestration engines like Kubernetes will auto-scale that container horizontally across the entire data centre. It’s not uncommon for applications to run on hundreds or thousands of containers.

What’s important here is that the smallest unit of workload is tiny, and scaling is something that a well-designed application will get for free. This allows your performance tests to scale accordingly, and it allows the whole notion of “what is application infrastructure” to be challenged. In the container world, an application is a service definition… and the rest is handled for you.
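For a feel of how horizontal auto-scaling responds to load, the sketch below reproduces the core ratio documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the replica limits, and the numbers are illustrative, and the real autoscaler also applies a tolerance band and stabilisation behaviour that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=100):
    """Simplified replica calculation based on the documented HPA ratio."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults above).
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))    # 6

# 6 replicas averaging 20% CPU against a 60% target: scale in.
print(desired_replicas(6, current_metric=20, target_metric=60))    # 2

# A large spike is capped at max_replicas.
print(desired_replicas(10, current_metric=900, target_metric=60))  # 100
```

For a performance test, this means the metric target and the replica limits are part of the workload definition: the same load can produce very different response times depending on how quickly and how far the service is allowed to scale.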

So, is DevPerfOps a thing? We think so, and we think it will only continue to grow and expand from here. It’s time that performance testing meets the needs of 2018 IT teams, and JDS can help. If you have any questions or want to discuss more, please get in touch with our performance engineering team by emailing [email protected]. If you’re looking for a quick, simple way of getting actionable insights into the performance health of your website or application, check out our current One Second Faster promotion.

Our team on the case

Read more Tech Tips

Integrating Splunk ITSI and Observability Cloud for Unified Insights

Part Three: ServiceNow Hyperautomation – Process Mining

What is the difference between a Vulnerability Scan and a Penetration Test?

Manual Security Testing vs Automated Scanning?

Five Reasons Why Your Organisation Should Be Penetration Testing

Mastering Modal Dialog Boxes

Understanding your Customer Journeys in Salesforce with AppDynamics

JDS Australia works with numerous customers who utilise the force.com platform as the primary interface for their end users ...

Implementing Salesforce monitoring in Splunk

Glide Variables

Fast-track ServiceNow upgrades with Automated Testing Framework (ATF)

Is DevPerfOps a thing?

Monitoring Atlassian Suite with AppDynamics

5 quick tips for customising your SAP data in Splunk

How to maintain versatility throughout your SAP lifecycle

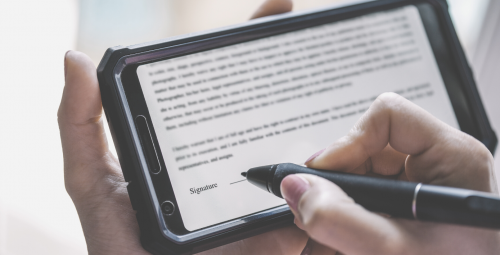

Implementing an electronic signature in ALM

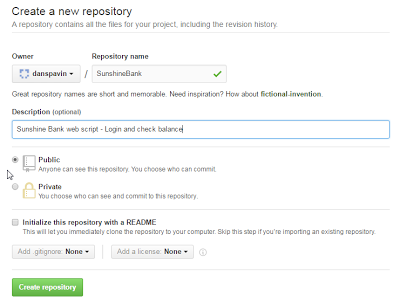

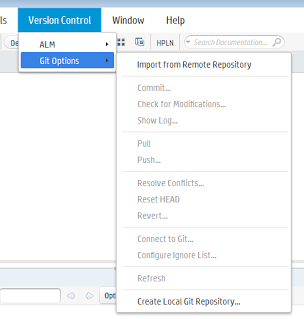

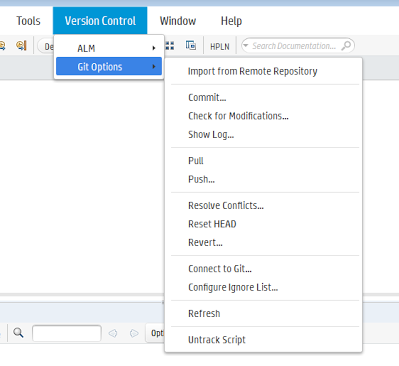

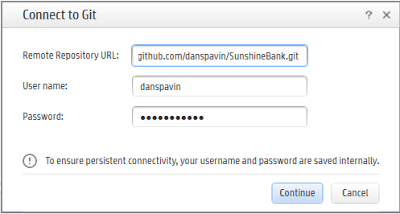

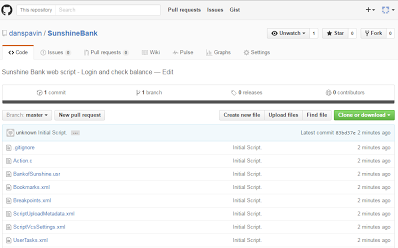

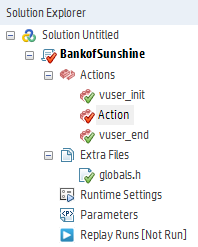

Vugen and GitHub Integration

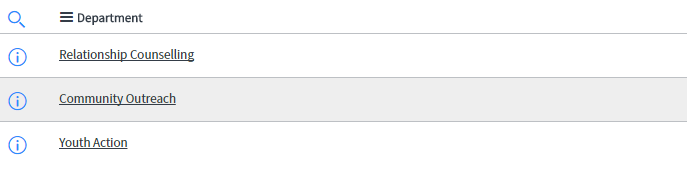

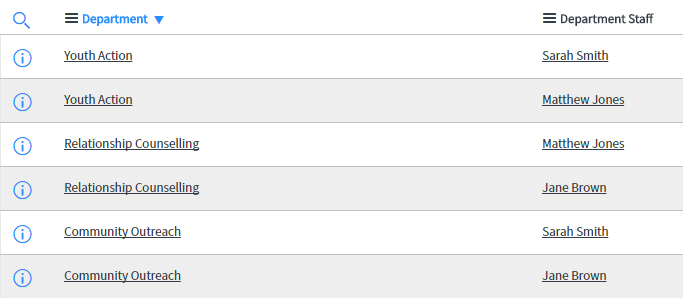

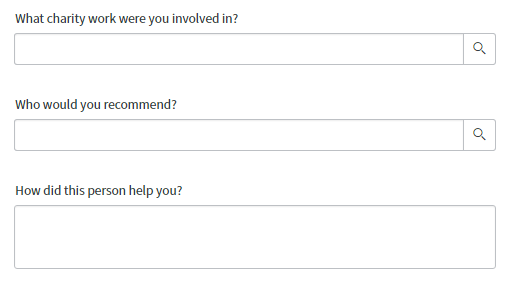

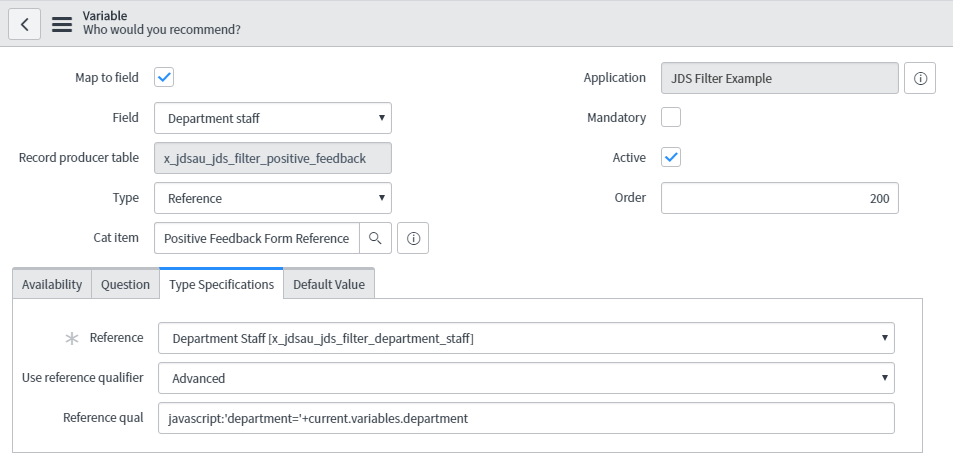

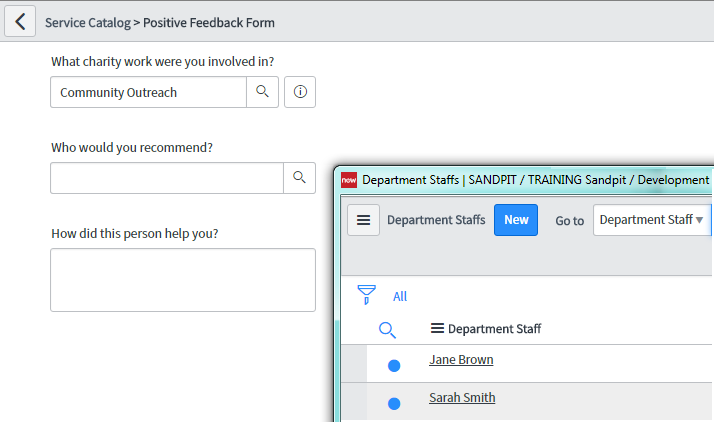

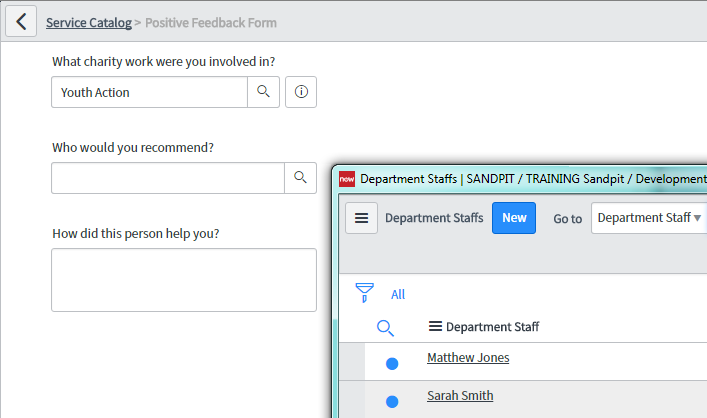

Filtered Reference Fields in ServiceNow

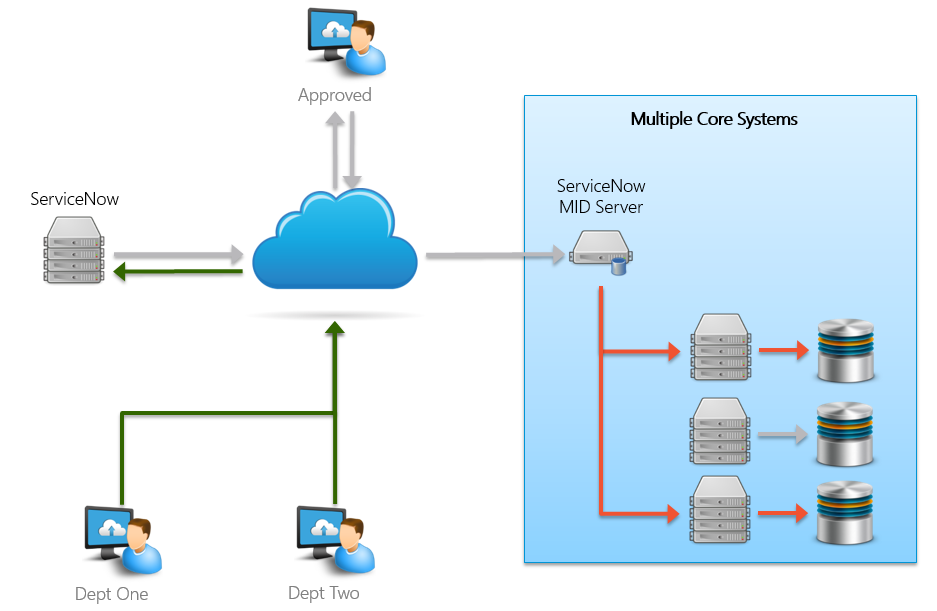

Straight-Through Processing with ServiceNow

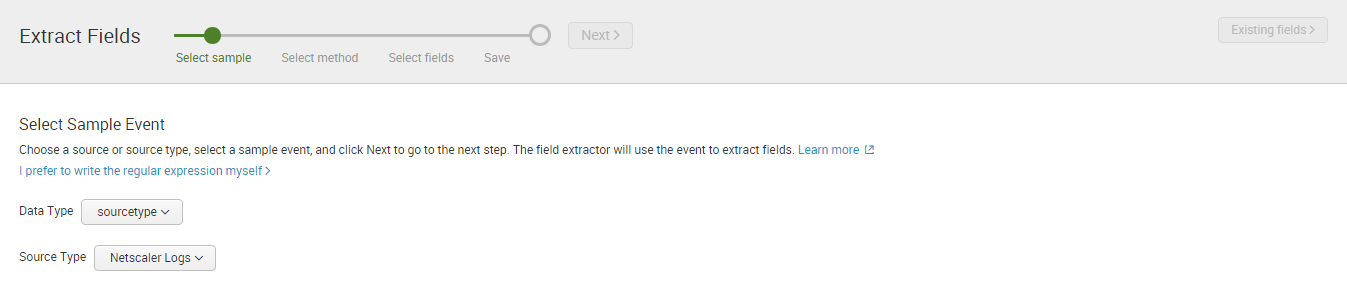

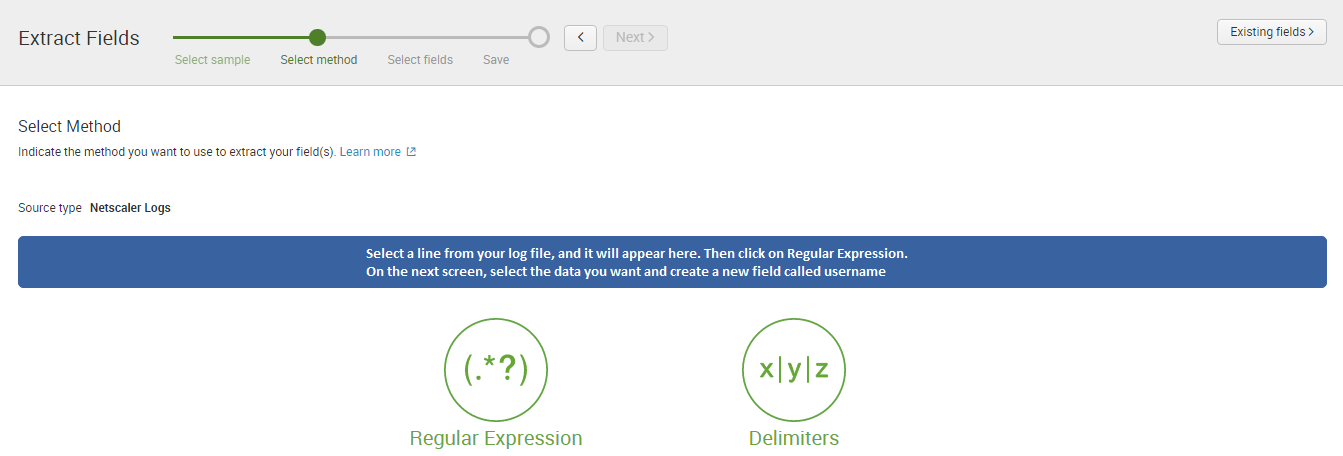

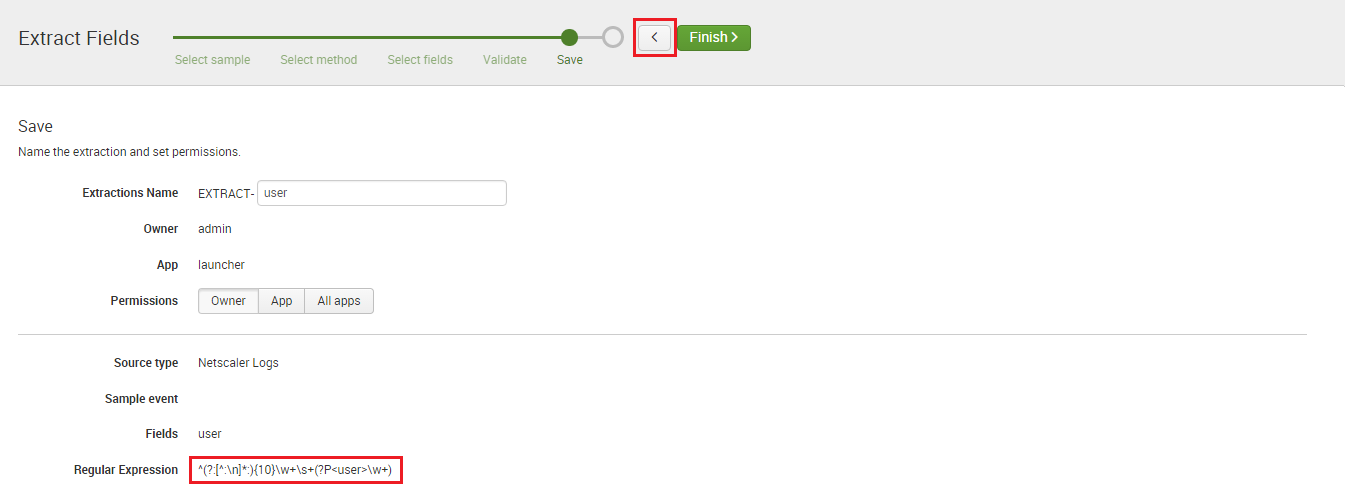

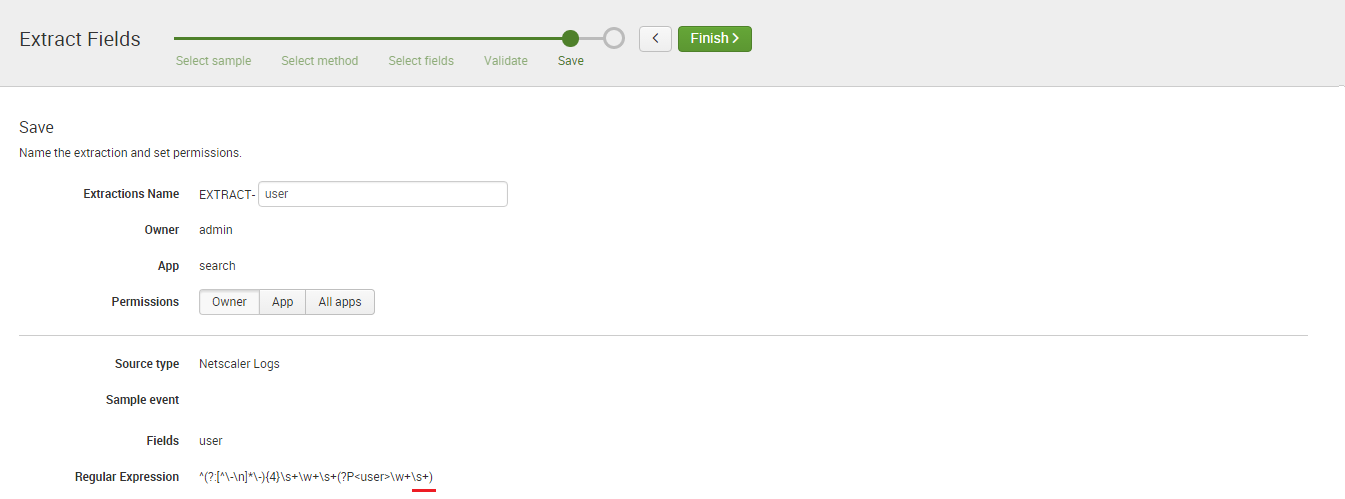

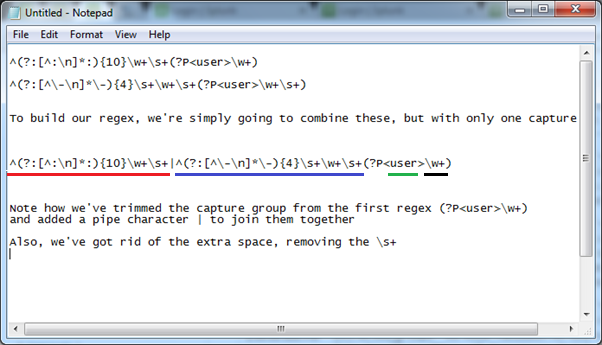

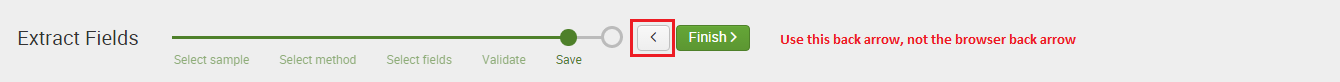

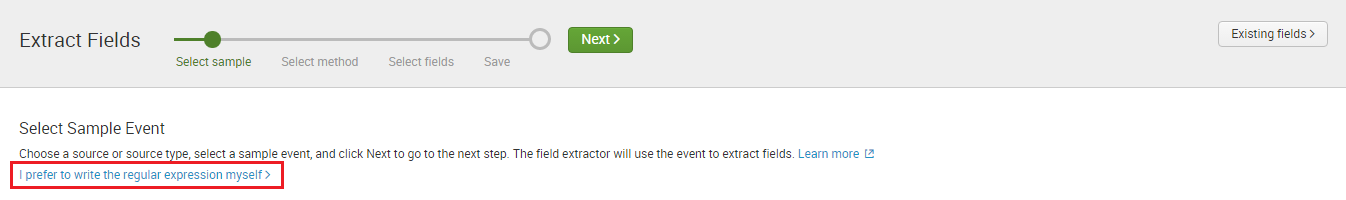

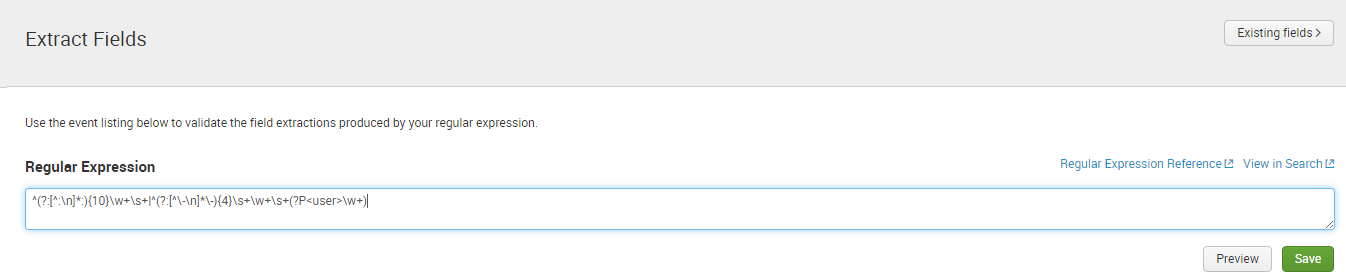

Splunk: Using Regex to Simplify Your Data